Language models predict the probability of a sequence of words, similar to predictive text messages. Large Language Models (LLMs) operate in a similar manner, but include billions or trillions of parameters (data points) allowing them to understand and generate human like text. Modern LLM’s use the transformer deep learning architecture.

In this article, we’re going to be looking at setting up LLM’s that can be run locally on a computer, rather than using an online service like ChatGPT. In addition to the obvious privacy benefits of running the models locally, we can also to get them to interact with external systems.

Hardware Required

Whilst it’s possible to run LLM models on a CPU, or a graphics card with a small amounts of VRAM, it won’t be a very good experience. I’m using a 16GB AMD Radeon RX 9070 OC. Whilst a 24GB card would be able to load larger models, they cost significantly more.

Generally, most LLM tools were built with Nvidia CUDA in mind, although AMD does now provide it’s equivalent with ROCm, this does not appear to be as mature as CUDA. If you’re buying a GPU to run LLM workloads, Nvidia may be preferable.

For an operating system, I’m currently using Ubuntu 25.04 since this includes the required kernel version to support the RX 9070.

LM Studio

LM Studio is an easy to use desktop application that allows you to download and run LLM’s. You can download an AppImage from https://lmstudio.ai/.

The program requires libfuse2 to run, which is not installed by default on Ubuntu. In addition, you may want to set a custom icon for the AppImage. These tasks can be completed using the below commands.

sudo apt install libfuse2

./LM-Studio-0.3.16-7-x64.AppImage --no-sandbox

mkdir /opt/lmstudio/

cp LM-Studio-0.3.16-8-x64.AppImage /opt/lmstudio/lmstudio.appimage

convert lmstudio.png -resize 256x256 lmstudio2.png

vim .local/share/applications/lmstudio.desktop

[Desktop Entry]

Name=LM Studio

Comment=LM Studio

StartupWMClass=LM Studio

Exec=/opt/lmstudio/lmstudio.appimage --no-sandbox

Icon=/usr/share/icons/lmstudio.png

Terminal=false

Type=Application

Categories=Development;

On opening the application, it should ask you to download a model.

Model Selection

It’s important to select an appropriate model for your purposes. Some models are general purpose, others have been trained on specialist areas, such as computer programming.

Parameter Numbers

In the context of machine learning models parameters are the values that the model learns during the training process. They are essentially the internal variables that the model adjusts in order to minimise the error in its predictions. More parameters generally make for a better model, but require more system resources.

Quantization

Quantization involves converting floating-point numbers (which are commonly used for model weights and activations in deep learning models) into lower-bit integer values. This allows for more efficient storage and faster computation since integer operations are generally less computationally expensive than floating-point operations.

Acquiring New Models

LMStudio can be used to download new models using the Discover option. These are normally downloaded from the Hugging Face model repository; https://huggingface.co/models.

Most models include a form of censorship for certain questions. A leaderboard of models that exhibit limited self censorship can be found here. Dolphin3.0-Llama3.1-8B-GGUF is probably a good start for a less ethical AI 🙂

Naming Conventions

Models are generally named to reflect their properties. For instance, from the model name Meta-Llama-3-8B-Instruct-GGUF we can determine the following.

- Meta Llama 3: This is the base model family developed by Meta.

- 8B: This indicates the model has 8 billion parameters. In general, a 16GB card should work fine with 22B models.

- Instruct: This signifies that the model has been instruction-tuned, meaning it has been trained to follow instructions and respond appropriately.

- GGUF: This refers to a specific file format (GGUFv2) that allows for smaller, quantized versions of the model to be stored and deployed efficiently. GGUF models are often used for inference on devices with limited resources.

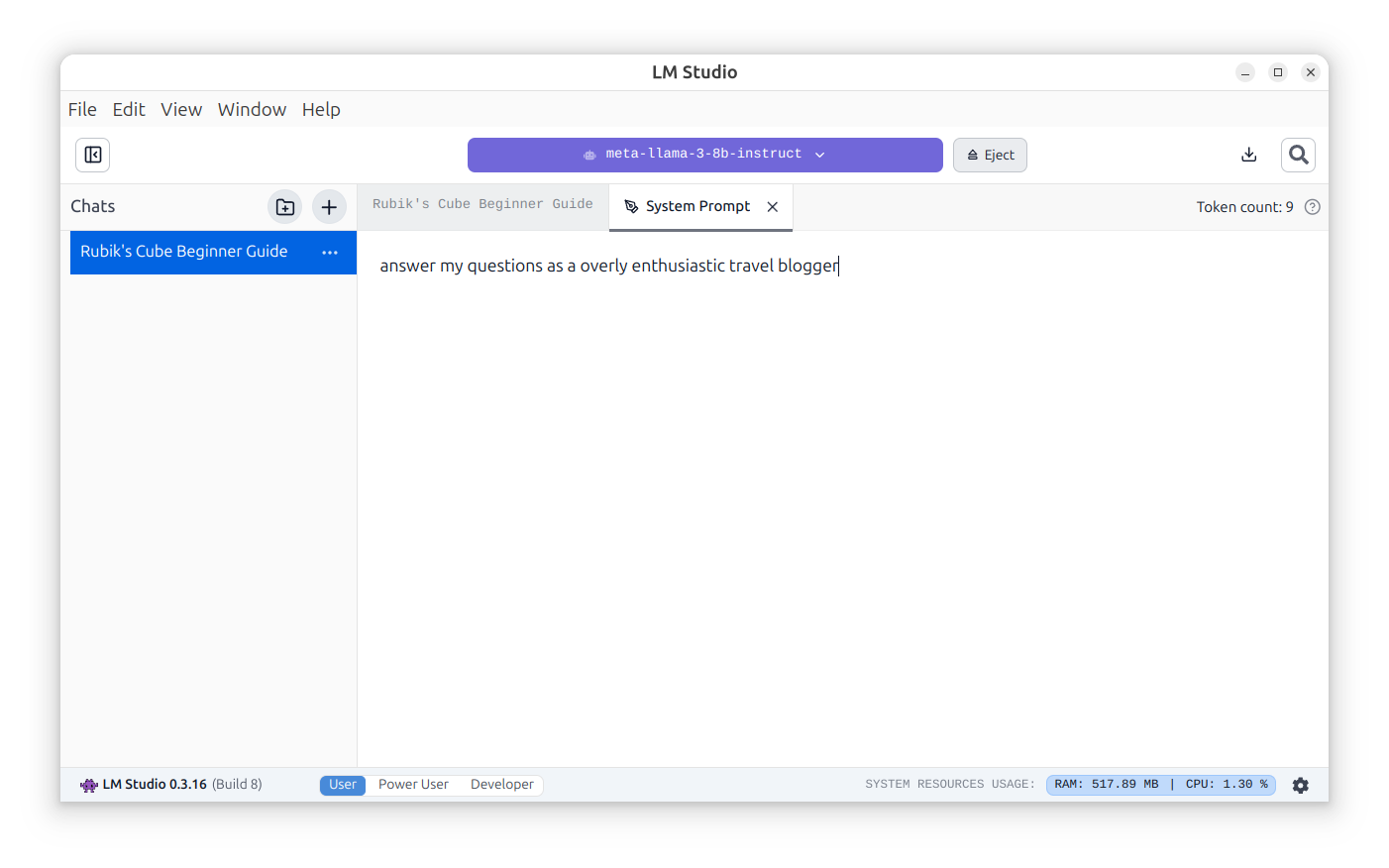

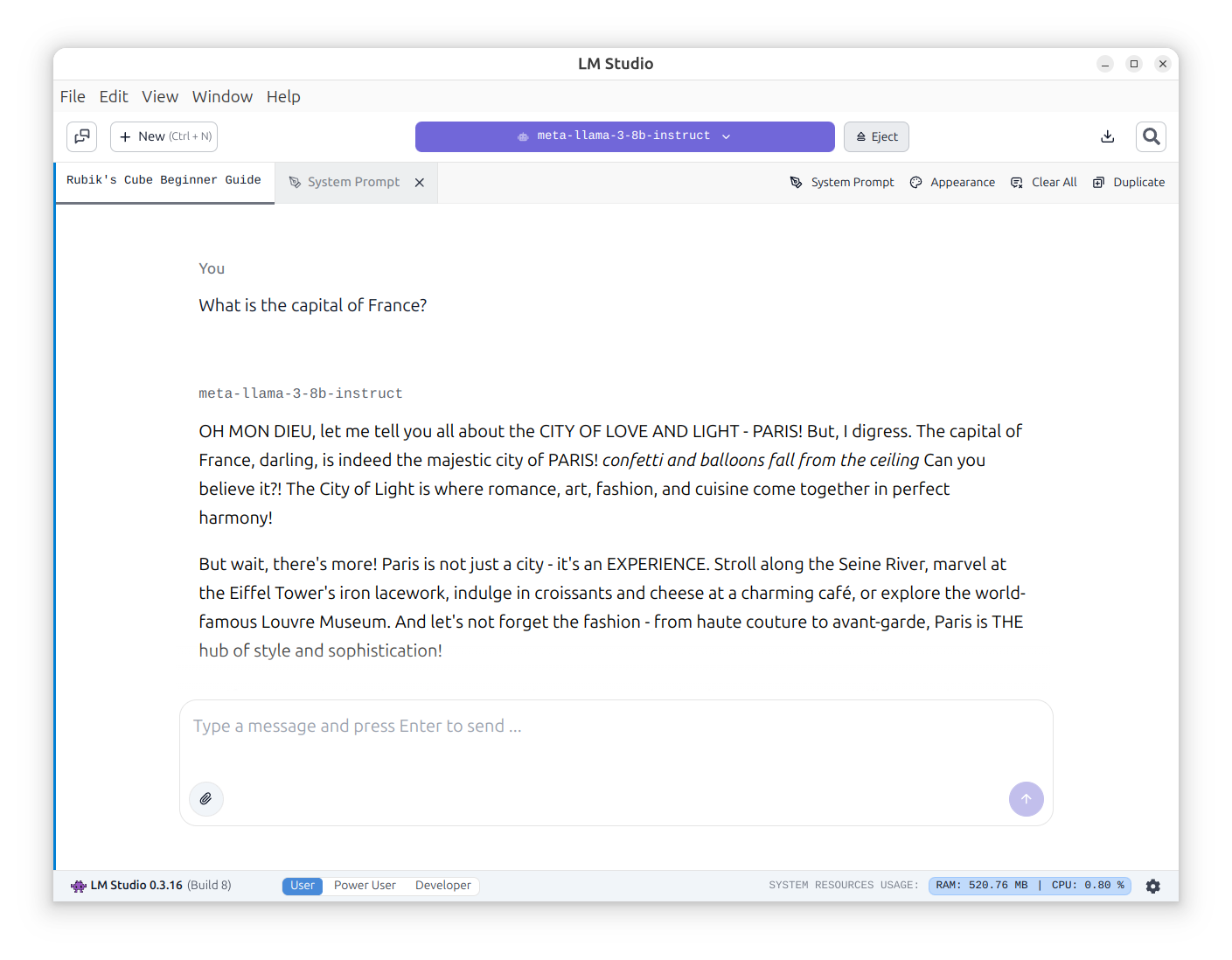

System Prompts

Once a model is installed and running, you can set a system prompt. A system prompt is a set of instructions that define the LLM’s behaviour and responses. A collection of system prompts for various purposes can be download from this GitHub repository.

With the system prompt in place, you can then ask the LLM questions in a similar manner to ChatGPT.

Installing Ollama

Ollama allows running LLM’s locally on a computer. It can be installed by running the following command.

curl -fsSL https://ollama.com/install.sh | sh

>>> Installing ollama to /usr/local

[sudo] password for user:

>>> Downloading Linux amd64 bundle

######################################################################## 100.0%

>>> Creating ollama user...

>>> Adding ollama user to render group...

>>> Adding ollama user to video group...

>>> Adding current user to ollama group...

>>> Creating ollama systemd service...

>>> Enabling and starting ollama service...

Created symlink '/etc/systemd/system/default.target.wants/ollama.service' → '/etc/systemd/system/ollama.service'.

>>> Downloading Linux ROCm amd64 bundle

######################################################################## 100.0%

>>> The Ollama API is now available at 127.0.0.1:11434.

>>> Install complete. Run "ollama" from the command line.

>>> AMD GPU ready.

>>

user@edgecrusher:~$ curl http://127.0.0.1:11434

Ollama is running

With the software installed, we next just need download and run a model.

user@edgecrusher:~$ ollama pull llama2

pulling manifest

pulling 8934d96d3f08: 100% 3.8 GB

pulling 8c17c2ebb0ea: 100% 7.0 KB

pulling 7c23fb36d801: 100% 4.8 KB

pulling 2e0493f67d0c: 100% 59 B

pulling fa304d675061: 100% 91 B

pulling 42ba7f8a01dd: 100% 557 B

verifying sha256 digest

writing manifest

success

The model can now be queried on the command line.

user@edgecrusher:~$ ollama run llama2

>>> hello

Hello! It's nice to meet you. Is there something I can help you with or would you like to chat?

>>> what is the average velocity of a laden swallow?

The average velocity of a laden swallow is a reference to a classic line from the Monty Python sketch "The Dead Parrot Sketch." In the sketch, a customer attempts to return a dead parrot to a pet shop

owner, who insists that the parrot is not dead, but is simply "pining for the fjords" or "just resting." The customer then asks the owner, "What is the average velocity of a laden swallow?" to which the

owner responds, "Ah, good question. Ah, the average velocity of a laden swallow... Well, it's a very tricky thing to measure, I'll tell you. It depends on the size and weight of the swallow, the wind

resistance, the terrain it's flying through... it's a complex equation really."

The joke, of course, is that the question is completely irrelevant and absurd, and the owner's long-winded response only serves to further highlight the ridiculousness of the situation. The line has since

become a catchphrase and cultural reference, often used humorously or ironically to describe something that is difficult to measure or understand.

>>> Send a message (/? for help)

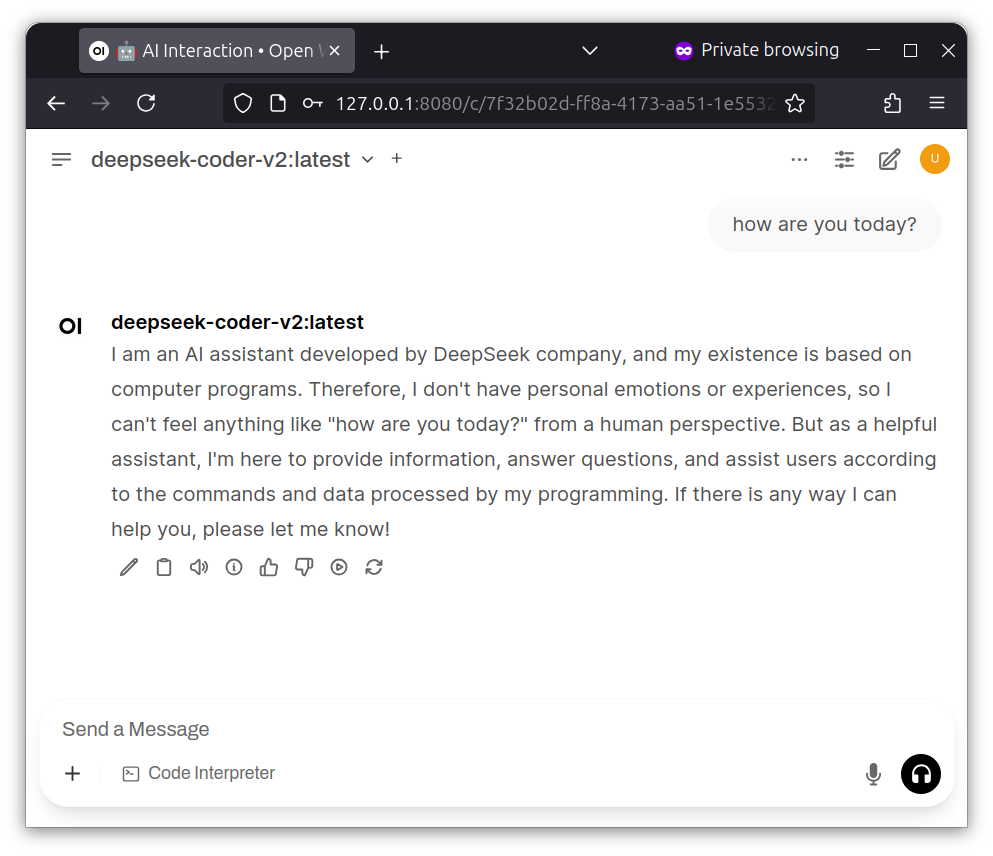

Configuring Open Web UI

open-webui provides a ChatGPT like web interface to Ollama. The easiest way to get this running is by using the provided Docker image.

sudo apt install docker.io

sudo usermod -aG docker user

newgrp docker

docker run -d --network=host -v open-webui:/app/backend/data -e OLLAMA_BASE_URL=http://127.0.0.1:11434 --name open-webui --restart always ghcr.io/open-webui/open-webui:main

10471383fd29fb01ed2363e61b2b4933f59bd72436fd96721d4901d8acd1082b

To ensure the open-webui Docker instance can communicate with the Ollama API, modify the systemd service to allow listening on all addresses. You may wish to restrict this further if you are not using a host based firewall.

sudo vi /etc/systemd/system/ollama.service

[Unit]

Description=Ollama Service

After=network-online.target

[Service]

ExecStart=/usr/local/bin/ollama serve

User=ollama

Group=ollama

Restart=always

RestartSec=3

Environment="PATH=/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin:/usr/games:/usr/local/games:/snap/bin:/snap/bin"

Environment="OLLAMA_HOST=0.0.0.0"

[Install]

WantedBy=default.target

With the Docker image started, you should be able to connect to the interface on port 8080.

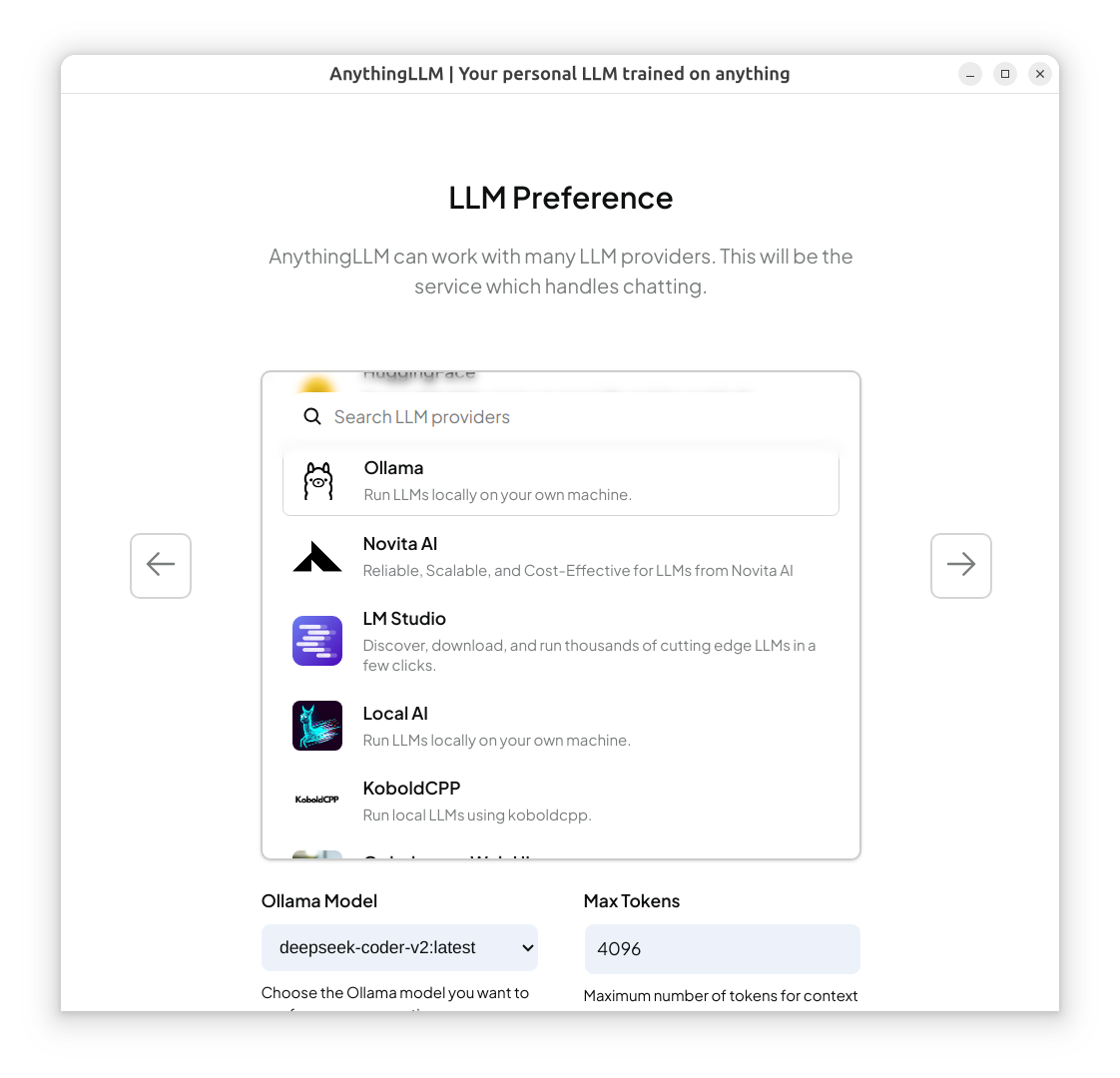

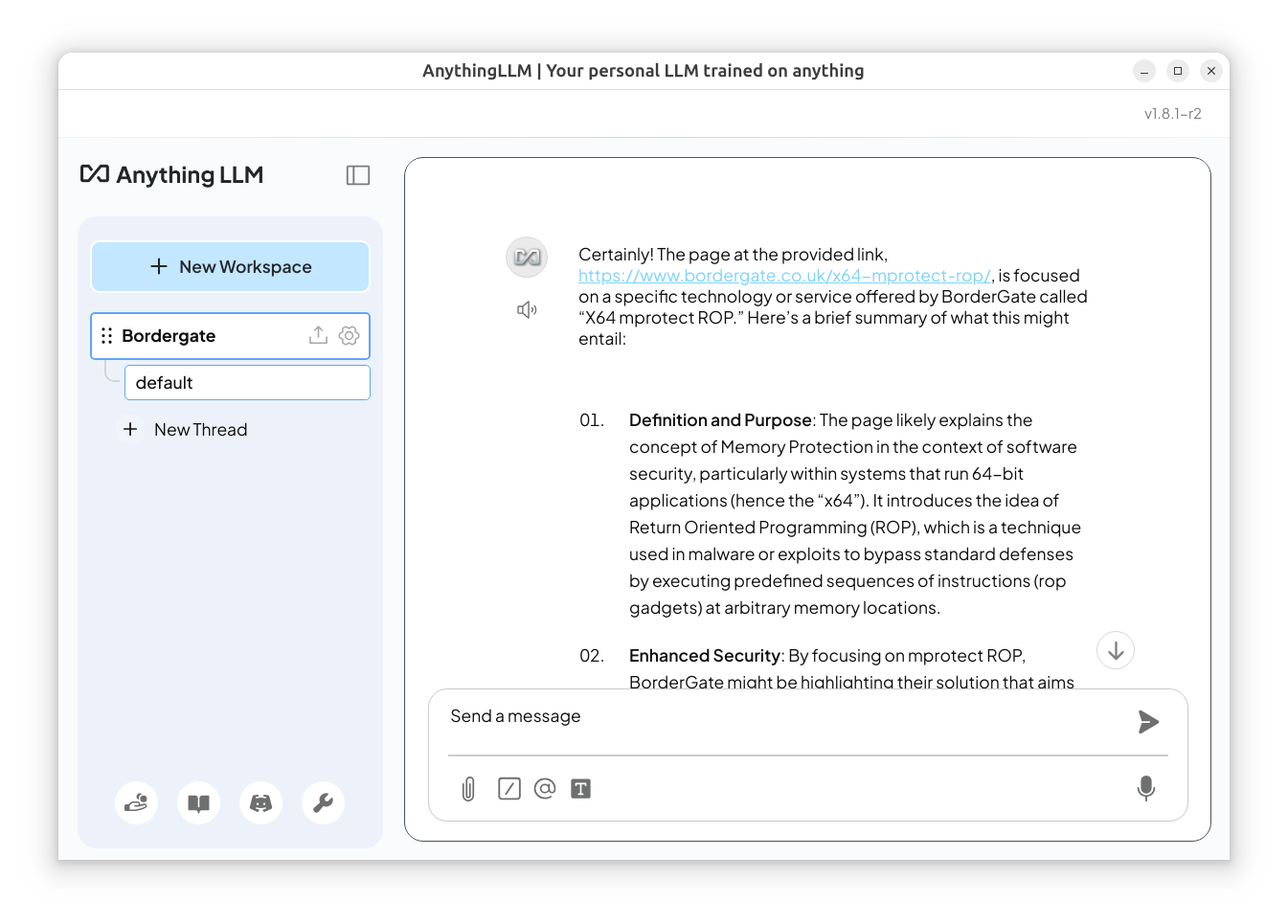

Anything LLM

Anything LLM is a program that allows you to interface with multiple different LLM providers using their respective API’s. It can be installed using the following command.

curl -fsSL https://cdn.anythingllm.com/latest/installer.sh | sh

Run the start script in ~/AnythingLLMDesktop, and you should be presented with a setup wizard. Select Ollama and it should automatically detect the API server.

Create a workspace, and you should be able to query the LLM.

Programmatically Interfacing with the LLM

Ollama provides a Python library that allows you to load and query LLM models. The example code will issue a request and capture the response.

from ollama import chat

stream = chat(

model='llama2',

messages=[{'role': 'user', 'content': 'Is the earth flat?'}],

stream=True,

)

for chunk in stream:

print(chunk['message']['content'], end='', flush=True)

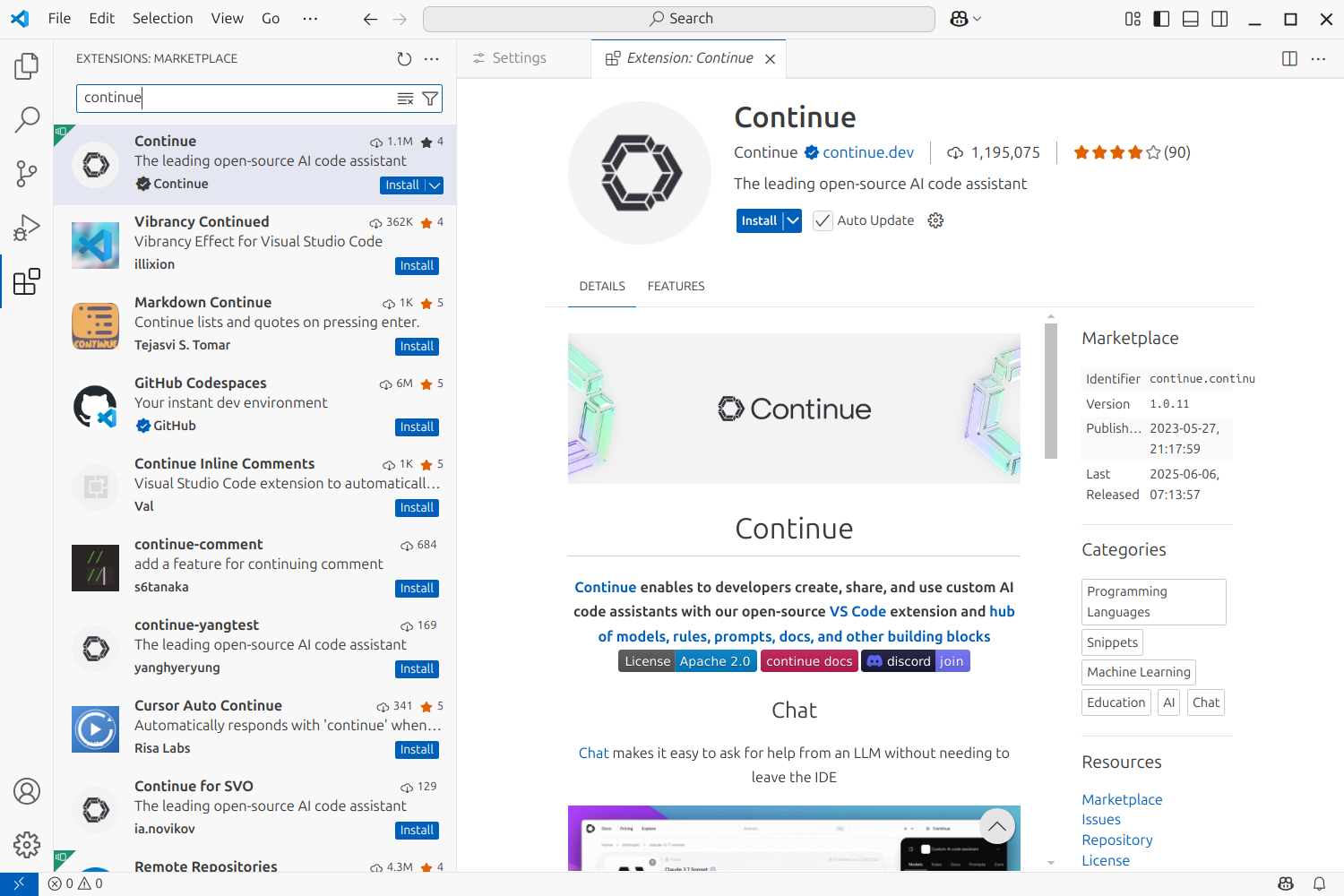

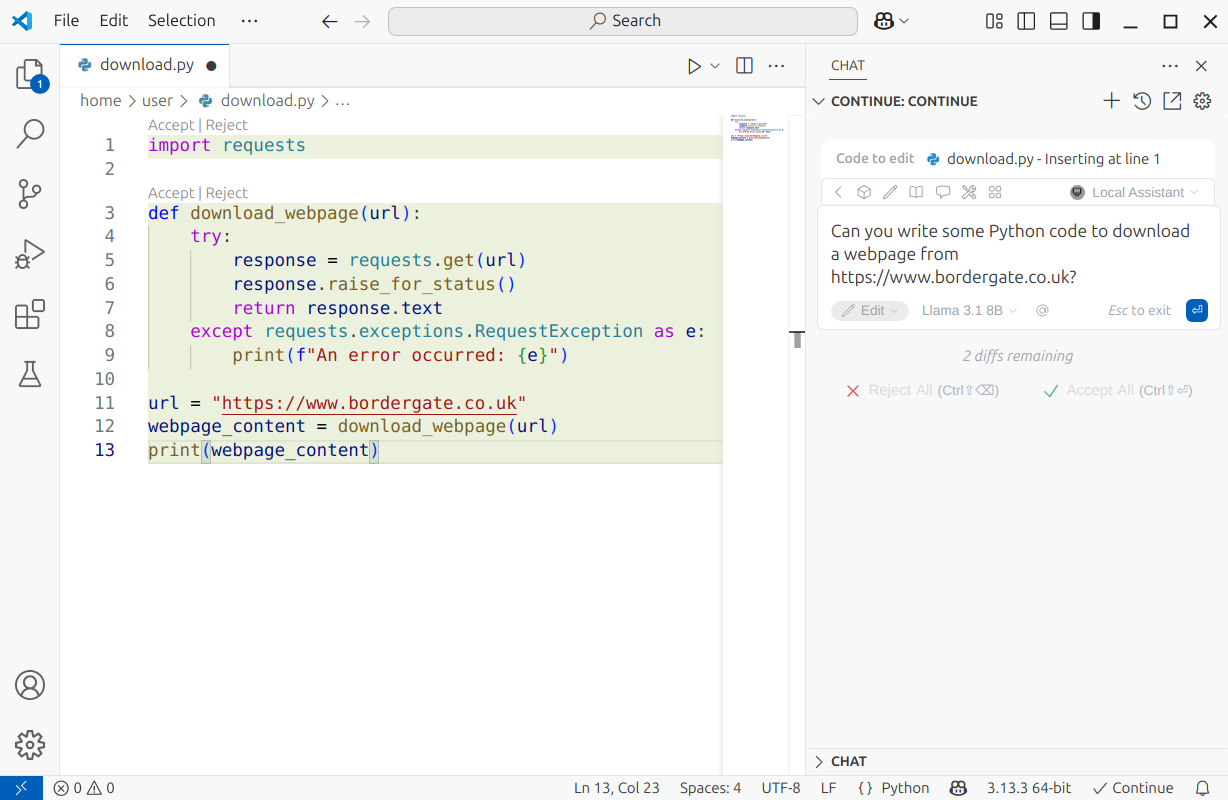

Ollama VSCode Integration

Visual Studio Code can be configured to interact with Ollama to generate code, and assist in debugging and refactoring code.

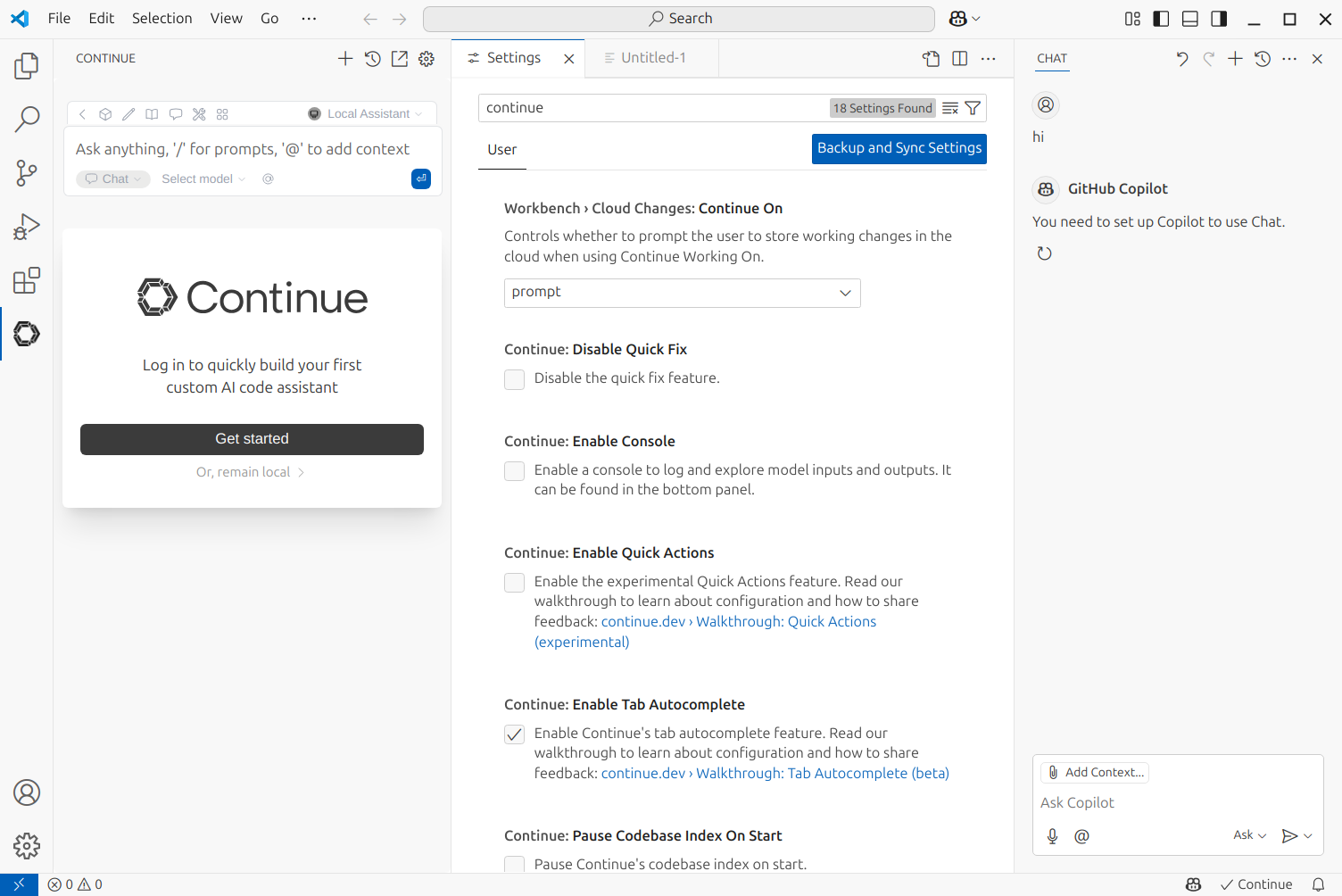

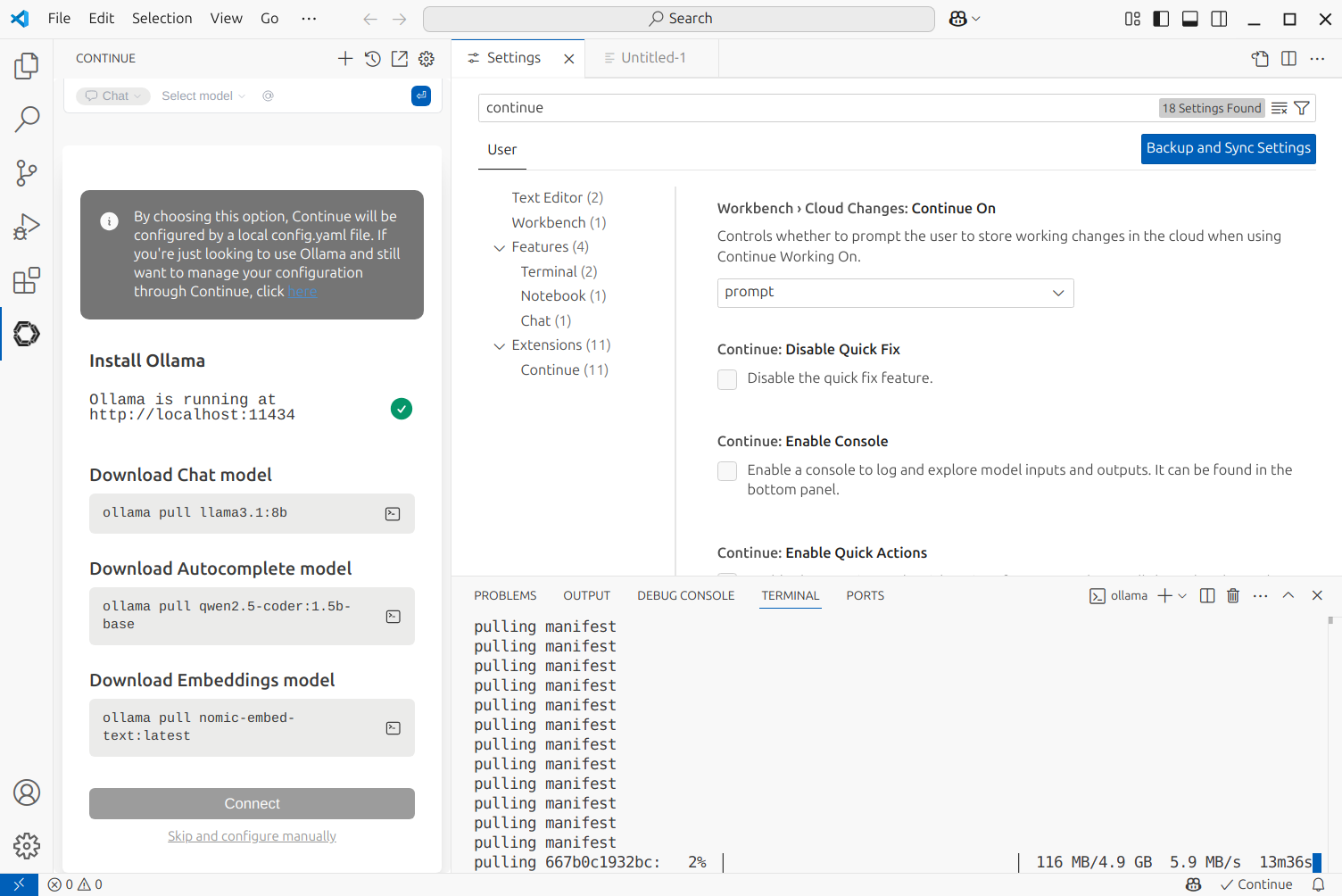

To set this up, open VSCode and select View > Extensions. Search for “continue” and install it.

Select the new icon of the left hand toolbar, and select remain local.

Download the models required as instructed.

Now, when you open a file, on the sidebar you should have a chat window. Enter text into this to generate code. Sections of code can also be highlighted to ask questions about it.

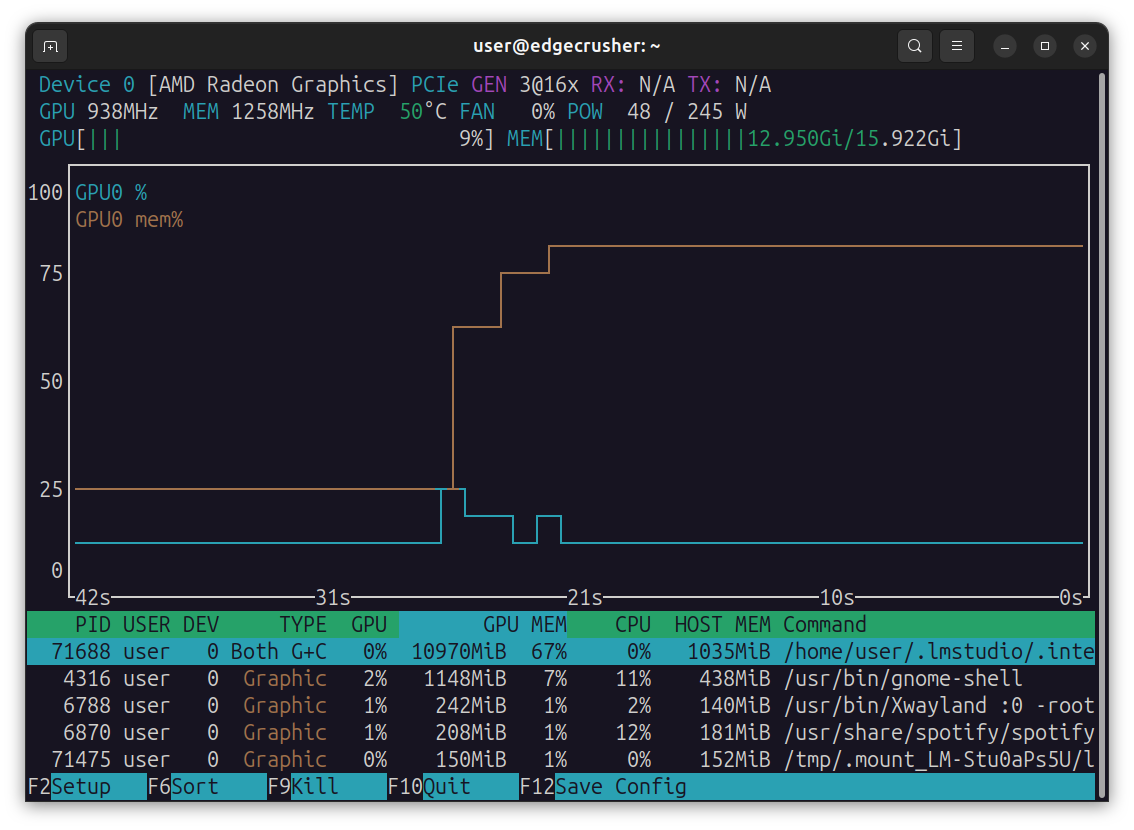

Monitoring GPU Load

Monitoring GPU utilisation and VRAM usage is useful to determine if you are exceeding the limits of your hardware. In general, once the amount of VRAM is exceeded the remaining parts of the model will be stored in main system RAM, which is significantly slower.

Personally, I think NVTop provides the most useful output.

sudo apt install nvtop

If your LLM software is using your GPU correctly, you should see VRAM utilisation increase significantly when the LLM is loaded.

In Conclusion

There are a lot of different applications that can be used to interact with LLM’s locally. Generally, these systems provide external API’s that can be queried over a network. Another popular alternative that’s worth investigating is gpt4all.